The Importance of Addressing Patients’ Concerns Over AI

A new survey finds “significant discomfort” among patients about the use of artificial intelligence (AI) in health delivery. As questions and speculation about how ChatGPT (and similar emerging AI models) will impact daily life continue to dominate the news, we believe patients’ discomfort with AI will likely increase even further in the near term – and it is incumbent on hospitals and health systems to get out in front of the issue before it becomes a problem.

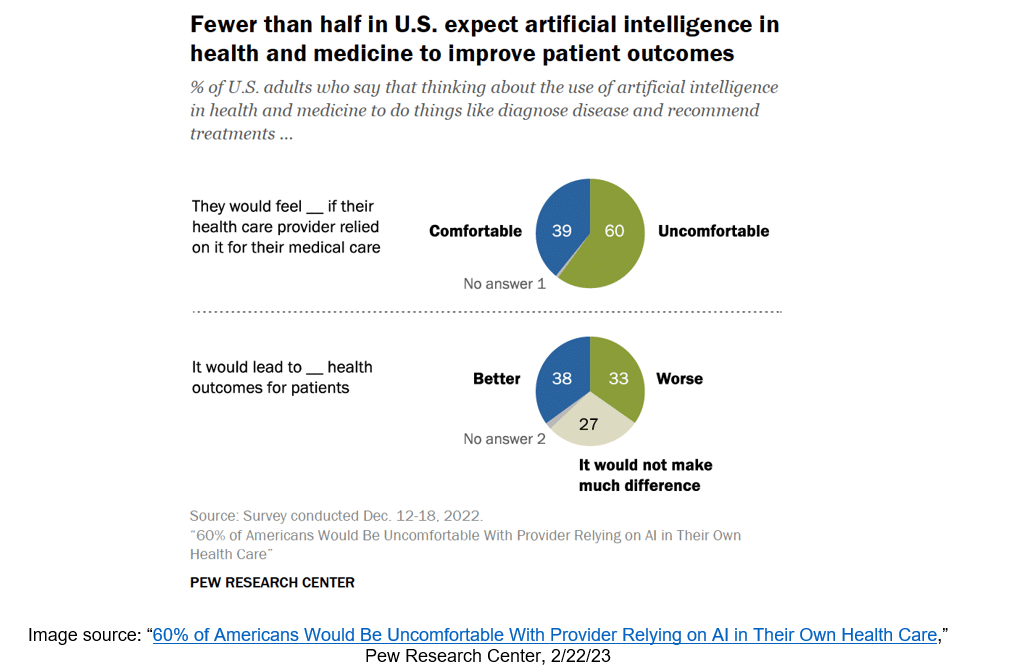

According to the survey from the Pew Research Center, “[60% of] U.S. adults say they would feel uncomfortable if their own health care provider relied on artificial intelligence to do things like diagnose disease and recommend treatments; a significantly smaller share (39%) say they would feel comfortable with this.” The authors add “these findings come as public attitudes toward AI continue to take shape, amid the ongoing adoption of AI technologies across industries and the accompanying national conversation about the benefits and risks that AI applications present for society.”

Why It Matters

We suspect much of the discomfort reported by survey respondents about AI in healthcare comes down to the difference between awareness and understanding. AI has been thrust into the national spotlight over the last few months in the form of the now-infamous ChatGPT, with headlines about how the model can do everything from writing college term papers to engaging in “unsettling” conversations with reporters. The result of this barrage of recent stories (which range from genuinely fascinating to undeniably creepy) is that, for better or for worse, much of the general public’s perception of “AI” right now is narrowly confined to what they are reading about ChatGPT.

However, as every hospital and health system knows, “artificial intelligence” is far broader (and far more mature) than just the emerging chatbot tools making headlines. The average patient likely has no idea how prevalent AI is in the current delivery of healthcare, let alone the important role it plays in improving efficiency, increasing automation, assessing risk, diagnosing disease, and predicting outcomes. Some hospitals and health systems may have well over one hundred algorithms used by clinicians to treat patients (e.g., the Braden Scale, the Schmid Fall Risk Assessment Tool, the Sequential Organ Failure Assessment score, etc.). These algorithms save lives and prevent injuries, but few patients are likely aware of them, and fewer still realize they are AI.

The concerns expressed by survey respondents about AI in healthcare – or at least what they perceive to be “AI” – have the potential to create problems for providers, especially as misconceptions about AI build. Public health depends on patients trusting how their doctor reached a diagnosis; outcomes are contingent on patients following care plans and adhering to recommended courses of treatment from their care team.

Hospitals and health systems need to do their part to address any misconceptions about AI before they become a problem. Transparency and proactive patient communication are essential. A good starting point could be simply investing time to ensure that staff at various points in the patient journey are able to provide informed, accurate, and easy-to-understand answers about how the hospital or health system is using AI (or at least are able to direct the patient to someone who can). Developing a brief FAQ on the organization’s website or proactively reaching out to patients via the hospital’s existing communication channels can go a long way towards helping them understand what AI is (and what it is not) and the ways the hospital or health system is currently leveraging AI.
